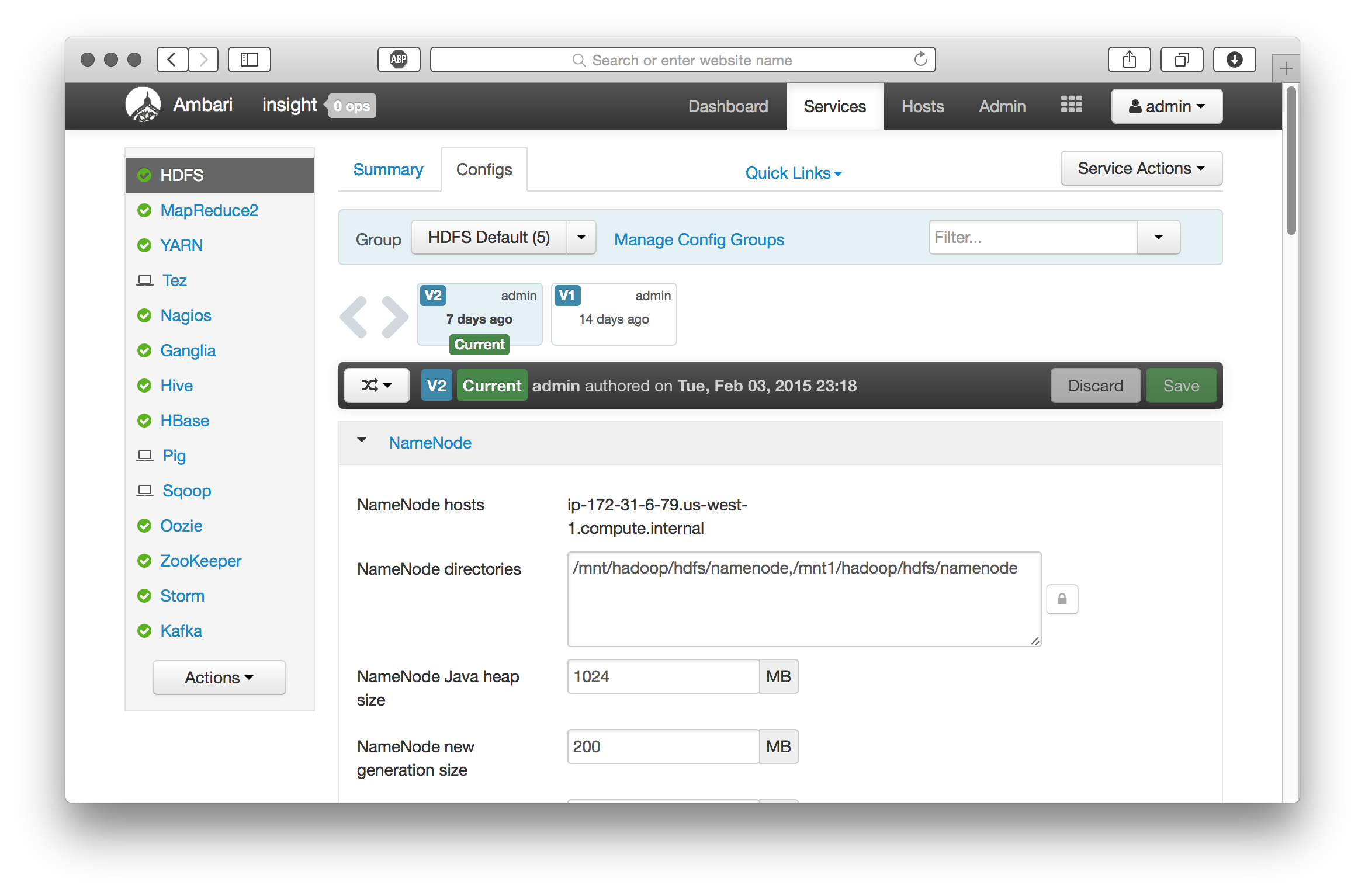

Configure Hadoop: all the configuration files mentioned here will be in ~/work/hadoop/conf. In this example we are assuming that the Hadoop servers will only be accessed from this localhost. You can update to a later version just by downloading and untarring the distro and then changing the symbolic links.

Once you click on the suggested link, it will bring you to a mirror with the recent releases. From there you can click on the stable link, which brings you to a directory that has the latest stable Hadoop (as of this writing: hadoop-0.20.2.tar.gz) or HBase (as of this writing: hbase-0.20.3.tar.gz). Click on those tar.gz files to download them.

I am going to keep the distros in ~/work/pkgs. I usually create the directory ~/work/pkgs, unpack the tar files there as numbered versions, and then create symbolic links to them in ~/work. You can do all of this in any directory that you control; here it starts with cd ~/work. Now your tools can all access ~/work/hadoop or ~/work/hbase and not care what version it is.
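The versioned layout described above (numbered releases in ~/work/pkgs, symbolic links in ~/work) could be scripted roughly as follows. This is only a sketch: use_version is a hypothetical helper name, the WORK variable is an assumed override that defaults to ~/work, and it assumes each tarball unpacks into a directory named after the file (for example hadoop-0.20.2.tar.gz into hadoop-0.20.2).

```shell
#!/bin/sh
# Assumed layout from the text: releases unpacked under $WORK/pkgs,
# version-independent symlinks ($WORK/hadoop, $WORK/hbase) next to it.
WORK="${WORK:-$HOME/work}"

# use_version NAME VERSION  (hypothetical helper, not part of Hadoop or HBase)
use_version() {
    name="$1"
    version="$2"
    dist="$WORK/pkgs/$name-$version"

    mkdir -p "$WORK/pkgs"

    # Unpack the downloaded tarball once, if it is present.
    if [ ! -d "$dist" ] && [ -f "$dist.tar.gz" ]; then
        tar -xzf "$dist.tar.gz" -C "$WORK/pkgs"
    fi

    if [ ! -d "$dist" ]; then
        echo "use_version: $dist not found; download $name-$version.tar.gz into $WORK/pkgs first" >&2
        return 1
    fi

    # Repoint the symlink; -n keeps ln from following an existing link to a directory.
    ln -sfn "pkgs/$name-$version" "$WORK/$name"
}
```

With the two tarballs saved in ~/work/pkgs, calling use_version hadoop 0.20.2 and use_version hbase 0.20.3 would create the two links. Upgrading later should only need another call with the new version number.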

You can get a link to a mirror for Hadoop via the Hadoop Apache Mirror link and for HBase via the HBase Apache Mirror link. Each of those links will bring you to a suggested mirror for Hadoop or HBase.

The Apple Mac Dev Center requires you to become a member, but there is a free membership available.


You can get Xcode for free from the Apple Mac Dev Center.


I wanted to do some experimenting with various tools for doing Hadoop and HBase activities and didn’t want to have to bother making it work with our Cluster in the Cloud. I just wanted a simple experimental environment on my MacBook Pro running Mac OS X Snow Leopard. So I thought it was time to revisit installing Hadoop and HBase on the Mac using the latest versions of everything. This will be deployed in Pseudo-Distributed mode, native to Mac OS X. Some folks actually create a set of Linux VMs with a full Hadoop/HBase stack and run that on the Mac, but that is a bit of overkill for now. These instructions mainly follow the standard instructions for Apache Hadoop and Apache HBase.

Prerequisites: the Mac OS X Xcode developer tools, which include Java 1.6.x.
